Static Telemetry

1. Feature Description

1.1 What is OpenTelemetry?

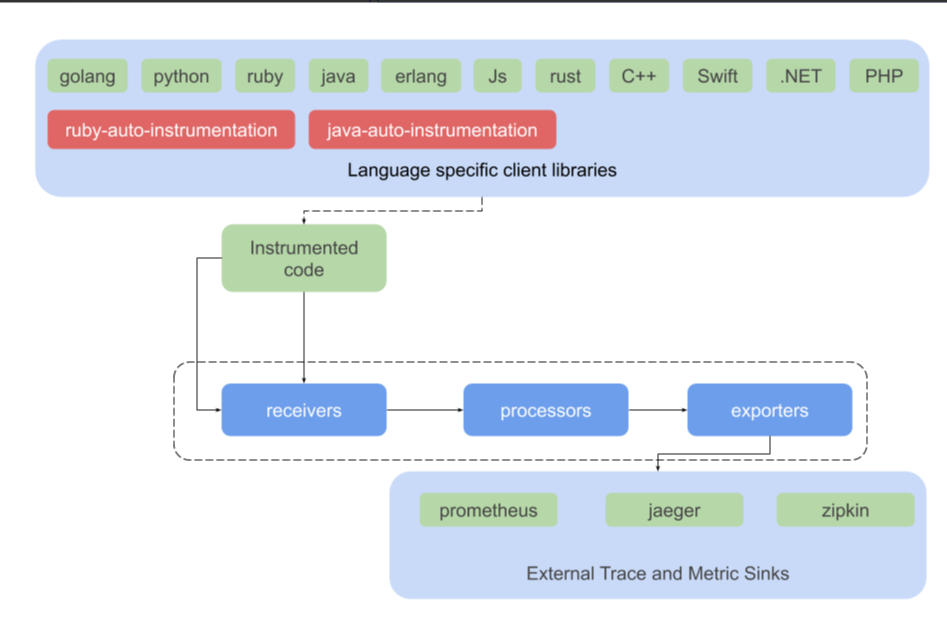

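OpenTelemetry merges the OpenTracing and OpenCensus projects and provides a set of APIs and libraries that standardize how telemetry data is collected and transmitted. It offers a secure, vendor-neutral toolset, so data can be sent to whichever backends you need.

The OpenTelemetry project consists of the following components: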

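- A specification that drives consistency across all projects

- APIs based on the specification, containing interfaces and implementations

- SDKs (implementations of the APIs) for different languages, such as Java, Python, Go and Erlang

- Exporters: send data to a backend of your choice

- Collectors: a vendor-neutral implementation for processing and exporting telemetry data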

1.2 Terminology

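- Traces: record the path of a request as it moves through a distributed system. A trace is a directed acyclic graph (DAG) of spans.

- Spans: a named, timed operation within a trace. Spans nest to form a trace tree. Each trace has one root span, which describes the end-to-end latency; its sub-operations may in turn have one or more child spans.

- Metrics: raw measurements about a service captured at runtime. OpenTelemetry defines a set of metric instruments. The Observer instrument collects data through an asynchronous API, taking one measurement per collection interval.

- Context: every span carries a span context. The span context is a globally unique identifier of the request the span belongs to, together with the data needed to carry trace information across service boundaries. OpenTelemetry also supports correlation context (since renamed Baggage), which can hold user-defined attributes. Correlation context is optional, and components may choose not to carry or store it.

- Context propagation: passing context information between services, usually in HTTP headers. Context propagation is one of the key features of OpenTelemetry. Besides tracing, it enables other uses such as A/B testing. OpenTelemetry supports context propagation over several protocols to avoid interoperability problems, but an application should preferably stick to a single method.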
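The relationship between traces, spans and the root span can be sketched in a few lines. This is a minimal illustration, not the OpenTelemetry SDK. The `Span` class and the `start_span`, `root_of`, `children` and `duration_ns` helpers are hypothetical. The ID sizes follow the W3C Trace Context format: a 16-byte trace ID and an 8-byte span ID.

```python
import secrets
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Span:
    name: str
    trace_id: str                    # 32 hex chars, shared by every span in the trace
    span_id: str                     # 16 hex chars, unique per span
    parent_id: Optional[str] = None  # None marks the root span
    start_ns: int = 0
    end_ns: int = 0


def start_span(name: str, parent: Optional[Span] = None,
               start_ns: int = 0, end_ns: int = 0) -> Span:
    """Create a span; a child inherits its parent's trace ID (hypothetical helper)."""
    trace_id = parent.trace_id if parent else secrets.token_hex(16)
    parent_id = parent.span_id if parent else None
    return Span(name, trace_id, secrets.token_hex(8), parent_id, start_ns, end_ns)


def root_of(spans: List[Span]) -> Span:
    """Return the single span without a parent."""
    roots = [s for s in spans if s.parent_id is None]
    if len(roots) != 1:
        raise ValueError("a trace must have exactly one root span")
    return roots[0]


def children(spans: List[Span], parent: Span) -> List[Span]:
    """Direct children of `parent`, found through the parent links."""
    return [s for s in spans if s.parent_id == parent.span_id]


def duration_ns(span: Span) -> int:
    return span.end_ns - span.start_ns
```

A child inherits its parent's trace ID and records the parent's span ID, so the whole tree can be rebuilt from the parent links. The root span's duration is the end-to-end latency of the request.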

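1.3 OpenTelemetry Architecture

OpenTelemetry also uses a plugin-based architecture. Each programming language has a corresponding client component called instrumentation. Instrumentation is the API/SDK that code calls at its instrumentation points to collect telemetry data.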

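NJet integrates the following telemetry modules:

Distributed service tracing module: njt_otel_module.so

Tracing between modules within a service: njt_otel_webserver_module.so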

2. Directives

2.1 Distributed service tracing module: njt_otel_module.so

opentelemetry

Enables or disables OpenTelemetry (default: enabled).

- required: false
- syntax: `opentelemetry on|off`
- block: http, server, location

opentelemetry_trust_incoming_spans

Enables or disables using the spans of incoming requests as the parent of the spans created for those requests (default: enabled).

- required: false
- syntax: `opentelemetry_trust_incoming_spans on|off`
- block: http, server, location
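With trust enabled, a valid incoming `traceparent` header makes the new server span a child of the caller's span. Otherwise a new trace begins. The sketch below is minimal and rests on two assumptions: the default W3C propagator is in use, and header keys are lower-case. `choose_parent` is a hypothetical helper, not NJet's implementation.

```python
import re
import secrets
from typing import Optional, Tuple

# W3C Trace Context: version-traceid-parentid-flags, lower-case hex
TRACEPARENT_RE = re.compile(r"^([0-9a-f]{2})-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$")


def parse_traceparent(value: Optional[str]) -> Optional[Tuple[str, str]]:
    """Return (trace_id, parent_span_id), or None if missing or invalid."""
    if not value:
        return None
    m = TRACEPARENT_RE.match(value.strip())
    if not m:
        return None
    version, trace_id, span_id, _flags = m.groups()
    # Version ff and all-zero IDs are invalid per the W3C spec.
    if version == "ff" or set(trace_id) == {"0"} or set(span_id) == {"0"}:
        return None
    return trace_id, span_id


def choose_parent(headers: dict, trust_incoming_spans: bool) -> Tuple[str, Optional[str]]:
    """Pick (trace_id, parent_span_id) for the new server span (hypothetical)."""
    incoming = parse_traceparent(headers.get("traceparent")) if trust_incoming_spans else None
    if incoming:
        return incoming                     # continue the caller's trace
    return secrets.token_hex(16), None      # start a new trace with a root span
```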

opentelemetry_attribute

Adds a custom attribute to the span. The value can reference nginx variables, for example: opentelemetry_attribute "my.user.agent" "$http_user_agent".

- required: false
- syntax: `opentelemetry_attribute <key> <value>`
- block: http, server, location
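Attribute values such as `$http_user_agent` are ordinary nginx variables. The sketch below covers only the `$http_<name>` family. nginx derives these variables from a request header by lower-casing its name and replacing dashes with underscores. The helper names are hypothetical, and returning an empty string for a missing header is a choice made only for this sketch.

```python
def http_variable(headers: dict, var: str) -> str:
    """Resolve an nginx-style $http_<name> variable from request headers."""
    if not var.startswith("$http_"):
        raise ValueError("only $http_* variables are handled in this sketch")
    wanted = var[len("$http_"):]
    for name, value in headers.items():
        # Header "User-Agent" becomes variable suffix "user_agent".
        if name.lower().replace("-", "_") == wanted:
            return value
    return ""  # this sketch's choice for a missing header


def span_attributes(directives: list, headers: dict) -> dict:
    """Build span attributes from (key, value) pairs like opentelemetry_attribute.

    Only $http_* values are resolved; anything else (e.g. $msec) is kept as-is.
    """
    attrs = {}
    for key, value in directives:
        attrs[key] = http_variable(headers, value) if value.startswith("$http_") else value
    return attrs
```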

opentelemetry_config

Path to the configuration file for exporters, processors and related settings (see the TOML example in section 3.1).

- required: true
- syntax: `opentelemetry_config /path/to/config.toml`
- block: http

opentelemetry_operation_name

Sets the operation name used when starting a new span.

- required: false
- syntax: `opentelemetry_operation_name <name>`
- block: http, server, location

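opentelemetry_propagate

Enables propagation of distributed tracing headers, e.g. traceparent.

A new trace is started when no parent trace is provided. The default propagator is W3C.

The same inheritance rules as proxy_set_header apply. The directive takes effect at the current configuration level if and only if no proxy_set_header directive is defined at a lower level. For example, if opentelemetry_propagate is set in a server block, a location inside it that defines its own proxy_set_header does not send the trace headers.

- required: false
- syntax: `opentelemetry_propagate` or `opentelemetry_propagate b3`
- block: http, server, location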
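For orientation, these are the shapes of the headers involved. This is a minimal sketch of what a proxy would add to the upstream request, and `outgoing_headers` is a hypothetical helper. For b3 it uses the single-header `b3` form. B3 also defines multi-header `X-B3-*` variants, and this document does not say which form the module emits.

```python
import secrets


def outgoing_headers(trace_id: str, propagator: str = "w3c", sampled: bool = True) -> dict:
    """Headers a proxy would add to the upstream request (sketch).

    A fresh 8-byte span ID identifies the proxy's span; the upstream
    service then uses it as its parent.
    """
    span_id = secrets.token_hex(8)
    if propagator == "w3c":
        # traceparent: version-traceid-parentid-flags (flag 01 = sampled)
        flags = "01" if sampled else "00"
        return {"traceparent": f"00-{trace_id}-{span_id}-{flags}"}
    if propagator == "b3":
        # B3 single-header form: {TraceId}-{SpanId}-{SamplingState}
        return {"b3": f"{trace_id}-{span_id}-{'1' if sampled else '0'}"}
    raise ValueError(f"unknown propagator: {propagator}")
```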

opentelemetry_capture_headers

Enables capturing of request and response headers (default: disabled).

- required: false
- syntax: `opentelemetry_capture_headers on|off`
- block: http, server, location

opentelemetry_sensitive_header_names

Sets the captured header value to [REDACTED] for all headers whose name matches the given regex (case-insensitive).

- required: false
- syntax: `opentelemetry_sensitive_header_names <regex>`
- block: http, server, location

opentelemetry_sensitive_header_values

Sets the captured header value to [REDACTED] for all headers whose value matches the given regex (case-insensitive).

- required: false
- syntax: `opentelemetry_sensitive_header_values <regex>`
- block: http, server, location
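The two redaction directives differ only in what the regex is tested against: the header name or the header value. The sketch below is minimal and assumes the match is a case-insensitive search anywhere in the string. This document does not say whether the module anchors the pattern. `capture_headers` is a hypothetical helper.

```python
import re
from typing import Optional

REDACTED = "[REDACTED]"


def capture_headers(headers: dict,
                    sensitive_names: Optional[str] = None,
                    sensitive_values: Optional[str] = None) -> dict:
    """Copy headers for a span, redacting sensitive names or values (sketch)."""
    name_re = re.compile(sensitive_names, re.IGNORECASE) if sensitive_names else None
    value_re = re.compile(sensitive_values, re.IGNORECASE) if sensitive_values else None
    captured = {}
    for name, value in headers.items():
        hit_name = bool(name_re and name_re.search(name))
        hit_value = bool(value_re and value_re.search(value))
        captured[name] = REDACTED if (hit_name or hit_value) else value
    return captured
```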

opentelemetry_ignore_paths

No span is created for URIs that match the given regex (case-insensitive).

- required: false
- syntax: `opentelemetry_ignore_paths <regex>`
- block: http, server, location
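Path filtering can be pictured the same way, with the regex tested against the request URI. The sketch below makes the same search-anywhere assumption. `should_trace` is a hypothetical helper.

```python
import re
from typing import Optional


def should_trace(uri: str, ignore_paths: Optional[str] = None) -> bool:
    """Return False when the URI matches the ignore regex (case-insensitive)."""
    if ignore_paths and re.search(ignore_paths, uri, re.IGNORECASE):
        return False
    return True
```

With the pattern `^/(health|metrics)`, health-check and metrics endpoints would be skipped while every other URI is still traced. Anchoring with `^` avoids accidentally matching those words deeper inside a path.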

2.2 Tracing between modules within a service: njt_otel_webserver_module.so

| Configuration directive | Default value | Description |
|---|---|---|
| NjetModuleEnabled | ON | OPTIONAL: Switch for instrumenting the NJet web server. |
| NjetModuleOtelSpanExporter | otlp | OPTIONAL: Span exporter to use. Supported values are "otlp" and "ostream"; other values may be added in the future. The default otlp is usually sufficient. |
| NjetModuleOtelExporterEndpoint | | REQUIRED: Collector endpoint the OTel exporter sends to, e.g. "docker.for.mac.localhost:4317". |
| NjetModuleServiceName | | REQUIRED: Logical name of the service. |
| NjetModuleServiceNamespace | | REQUIRED: Namespace for the service name. |
| NjetModuleServiceInstanceId | | REQUIRED: String ID of the service instance. |
| NjetModuleTraceAsInfo | | OPTIONAL: Logging level for trace output: ON prints info-level logs, OFF turns this logging off. |
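Four of the directives above are required, so a quick pre-flight check of opentelemetry_module.conf can catch omissions before NJet is reloaded. The sketch below is minimal. It assumes one `Directive value;` per line with `#` comments, as in the example in section 3.2. The parser and the `REQUIRED` tuple are hypothetical helpers derived from this table, not part of NJet.

```python
import re

# Directives marked REQUIRED in the table above
REQUIRED = (
    "NjetModuleOtelExporterEndpoint",
    "NjetModuleServiceName",
    "NjetModuleServiceNamespace",
    "NjetModuleServiceInstanceId",
)


def parse_module_conf(text: str) -> dict:
    """Parse 'Directive value;' lines, ignoring '#' comments (sketch)."""
    directives = {}
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()   # drop trailing comments
        if not line:
            continue
        m = re.match(r"^(\w+)\s+(.+?)\s*;$", line)
        if not m:
            raise ValueError(f"cannot parse line: {raw!r}")
        directives[m.group(1)] = m.group(2)
    return directives


def missing_required(directives: dict) -> list:
    """Names of required directives that are absent, in table order."""
    return [d for d in REQUIRED if d not in directives]
```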

3. Configuration

3.1 Distributed service tracing module: njt_otel_module.so

njet.conf configuration:

```nginx
#user root;
worker_processes 1;
daemon off;
#master_process on;

error_log /home/njet/clbtest/logs/error.log debug;
pid /home/njet/clbtest/njet.pid;

load_module /home/njet/modules/njt_otel_module.so;

events {
    worker_connections 1024;
}

http {
    opentelemetry_config /home/njet/clbtest/conf/otel-njet.toml;
    opentelemetry off;

    upstream http1 {
        server 192.168.40.139:9002;
        #server 192.168.40.136:8082;
    }

    server {
        listen 8081;
        server_name otel_example;

        location = / {
            opentelemetry on;
            opentelemetry_operation_name my_example_backend;
            opentelemetry_propagate;
            proxy_pass http://http1;
        }

        location = /b3 {
            opentelemetry on;
            opentelemetry_operation_name my_other_backend;
            opentelemetry_propagate b3;
            # Adds a custom attribute to the span
            opentelemetry_attribute "req.time" "$msec";
            proxy_pass http://http1;
        }
    }
}
```

Otel module configuration (otel-njet.toml):

```toml
exporter = "otlp"
processor = "batch"

[exporters.otlp]
# Collector server address
# Alternatively the OTEL_EXPORTER_OTLP_ENDPOINT environment variable can also be used.
host = "192.168.40.136"
port = 4317
# Optional: enable SSL, for endpoints that support it
# use_ssl = true
# Optional: set a filesystem path to a pem file to be used for SSL encryption
# (when use_ssl = true)
# ssl_cert_path = "/path/to/cert.pem"

[processors.batch]
max_queue_size = 2048
schedule_delay_millis = 5000
max_export_batch_size = 512

[service]
# Can also be set by the OTEL_SERVICE_NAME environment variable.
name = "njet-proxy" # OpenTelemetry resource name

[sampler]
name = "AlwaysOn" # Also: AlwaysOff, TraceIdRatioBased
ratio = 0.1       # used only by TraceIdRatioBased
parent_based = false
```
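When `name = "TraceIdRatioBased"`, the `ratio` key decides what fraction of new traces is kept. Setting `parent_based = true` would instead make the sampler follow the caller's sampled flag, in the usual ParentBased sense. The sketch below shows one common way to make the decision deterministic per trace ID: compare the low 63 bits of the ID against `ratio * 2^63`. The C++ SDK behind the module may derive the value differently. `should_sample` and `trace_id_ratio_sampled` are hypothetical helpers.

```python
from typing import Optional


def trace_id_ratio_sampled(trace_id: str, ratio: float) -> bool:
    """Deterministic ratio sampling from a 32-hex-char trace ID (sketch)."""
    if ratio >= 1.0:
        return True
    if ratio <= 0.0:
        return False
    bound = int(ratio * (1 << 63))
    low63 = int(trace_id[16:32], 16) >> 1   # last 8 bytes, reduced to 63 bits
    return low63 < bound


def should_sample(name: str, trace_id: str, ratio: float = 0.1,
                  parent_based: bool = False,
                  parent_sampled: Optional[bool] = None) -> bool:
    """Mirror the [sampler] table: name, ratio, parent_based (hypothetical)."""
    if parent_based and parent_sampled is not None:
        return parent_sampled            # follow the caller's decision
    if name == "AlwaysOn":
        return True
    if name == "AlwaysOff":
        return False
    if name == "TraceIdRatioBased":
        return trace_id_ratio_sampled(trace_id, ratio)
    raise ValueError(f"unknown sampler: {name}")
```

Because the decision depends only on the trace ID, every service applying the same ratio keeps or drops the same traces, so sampled traces stay complete.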

3.2 Tracing between modules within a service: njt_otel_webserver_module.so

njet.conf:

```nginx
user nginx;
worker_processes 1;

error_log /home/njet/clbtest/logs/error.log warn;
pid /home/njet/clbtest/nginx.pid;

load_module /home/njet/clbtest/newlib/njt_otel_webserver_module.so;
# Load njt_otel_webserver_module.so from one path only; loading the same
# module twice is rejected at startup.
#load_module modules/njt_otel_webserver_module.so;
load_module modules/njt_agent_dyn_otel_webserver_module.so;

events {
    worker_connections 1024;
}

http {
    default_type application/octet-stream;

    log_format main '$remote_addr - $remote_user [$time_local] "$request" '
                    '$status $body_bytes_sent "$http_referer" '
                    '"$http_user_agent" "$http_x_forwarded_for"';

    access_log /var/log/nginx/access.log main;

    include /home/njet/clbtest/webserver_conf/opentelemetry_module.conf;

    upstream http1 {
        server 192.168.40.108:8082;
    }

    upstream http2 {
        server 192.168.40.139:9002;
        #server 192.168.40.136:8082;
    }

    server {
        listen 8081;
        server_name otel_example;

        location = / {
            proxy_pass http://http1;
        }
    }

    server {
        listen 8082;
        server_name otel_example;

        location = / {
            proxy_pass http://http2;
        }
    }
}
```

Otel webserver module configuration (opentelemetry_module.conf):

```nginx
NjetModuleEnabled OFF;                                 # set to ON to enable instrumentation
NjetModuleOtelSpanExporter otlp;
NjetModuleOtelExporterEndpoint 192.168.40.136:4317;    # change to your own collector IP; the port does not need to change
NjetModuleServiceName DemoService;
NjetModuleServiceNamespace DemoServiceNamespace;
NjetModuleServiceInstanceId DemoInstanceId;
NjetModuleResolveBackends ON;                          # fixed value; do not modify
NjetModuleTraceAsInfo ON;
```

3.3 Start the collector and Jaeger services

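Start the collector and Jaeger services with docker-compose.

If docker-compose is not installed, install it first:

```shell
yum install docker-compose
```

Then run:

```shell
docker-compose -f docker-compose.yaml up -d
docker-compose -f docker-compose.yaml down   # stop Jaeger and the other services
```

docker-compose.yaml: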

```yaml
version: "2"
services:

  # Jaeger
  jaeger-all-in-one:
    image: jaegertracing/all-in-one:latest
    restart: always
    ports:
      - "16686:16686"
      - "14268"
      - "14250"

  # Zipkin
  zipkin-all-in-one:
    image: openzipkin/zipkin:latest
    restart: always
    ports:
      - "9411:9411"

  # Collector
  otel-collector:
    image: otel/opentelemetry-collector:0.67.0
    restart: always
    command: ["--config=/etc/otel-collector-config.yaml", "${OTELCOL_ARGS}"]
    volumes:
      - ./otel-collector-config.yaml:/etc/otel-collector-config.yaml
    ports:
      - "1888:1888"   # pprof extension
      - "8888:8888"   # Prometheus metrics exposed by the collector
      - "8889:8889"   # Prometheus exporter metrics
      - "13133:13133" # health_check extension
      - "4317:4317"   # OTLP gRPC receiver
      - "4318:4318"   # OTLP http receiver
      - "55679:55679" # zpages extension
    depends_on:
      - jaeger-all-in-one
      - zipkin-all-in-one

  #demo-client:
  #  build:
  #    dockerfile: Dockerfile
  #    context: ./client
  #  restart: always
  #  environment:
  #    - OTEL_EXPORTER_OTLP_ENDPOINT=otel-collector:4317
  #    - DEMO_SERVER_ENDPOINT=http://demo-server:7080/hello
  #  depends_on:
  #    - demo-server

  #demo-server:
  #  build:
  #    dockerfile: Dockerfile
  #    context: ./server
  #  restart: always
  #  environment:
  #    - OTEL_EXPORTER_OTLP_ENDPOINT=otel-collector:4317
  #  ports:
  #    - "7080"
  #  depends_on:
  #    - otel-collector

  #prometheus:
  #  container_name: prometheus
  #  image: prom/prometheus:latest
  #  restart: always
  #  volumes:
  #    - ./prometheus.yaml:/etc/prometheus/prometheus.yml
  #  ports:
  #    - "9090:9090"
```

otel-collector-config.yaml:

```yaml
receivers:
  otlp:
    protocols:
      grpc:
      http:

exporters:
  prometheus:
    endpoint: "0.0.0.0:8889"
    const_labels:
      label1: value1

  logging:

  zipkin:
    endpoint: "http://zipkin-all-in-one:9411/api/v2/spans"
    format: proto

  jaeger:
    endpoint: jaeger-all-in-one:14250
    tls:
      insecure: true

processors:
  batch:

extensions:
  health_check:
  pprof:
    endpoint: :1888
  zpages:
    endpoint: :55679

service:
  extensions: [pprof, zpages, health_check]
  pipelines:
    traces:
      receivers: [otlp]
      processors: [batch]
      exporters: [logging, zipkin, jaeger]
    metrics:
      receivers: [otlp]
      processors: [batch]
      exporters: [logging, prometheus]
```

opentelemetry_sdk_log4cxx.xml (log configuration file):

Place this file in the same directory as the other NJet configuration files.

```xml
<?xml version="1.0" encoding="UTF-8" ?>
<log4j:configuration xmlns:log4j="http://jakarta.apache.org/log4j/" debug="false">

    <appender name="main" class="org.apache.log4j.ConsoleAppender">
        <layout class="org.apache.log4j.PatternLayout">
            <param name="ConversionPattern" value="%d{yyyy-MM-dd HH:mm:ss.SSS z} %-5p %X{pid}[%t] [%c{2}] %m%n" />
            <param name="HeaderPattern" value="Opentelemetry Webserver %X{version} %X{pid}%n" />
        </layout>
    </appender>

    <appender name="api" class="org.apache.log4j.ConsoleAppender">
        <layout class="org.apache.log4j.PatternLayout">
            <param name="ConversionPattern" value="%d{yyyy-MM-dd HH:mm:ss.SSS z} %-5p %X{pid} [%t] [%c{2}] %m%n" />
            <param name="HeaderPattern" value="Opentelemetry Webserver %X{version} %X{pid}%n" />
        </layout>
    </appender>

    <appender name="api_user" class="org.apache.log4j.ConsoleAppender">
        <layout class="org.apache.log4j.PatternLayout">
            <param name="ConversionPattern" value="%d{yyyy-MM-dd HH:mm:ss.SSS z} %-5p %X{pid} [%t] [%c{2}] %m%n" />
            <param name="HeaderPattern" value="Opentelemetry Webserver %X{version} %X{pid}%n" />
        </layout>
    </appender>

    <logger name="api" additivity="false">
        <level value="info"/>
        <appender-ref ref="api"/>
    </logger>

    <logger name="api_user" additivity="false">
        <level value="info"/>
        <appender-ref ref="api_user"/>
    </logger>

    <root>
        <priority value="info" />
        <appender-ref ref="main"/>
    </root>

</log4j:configuration>
```

4. Results

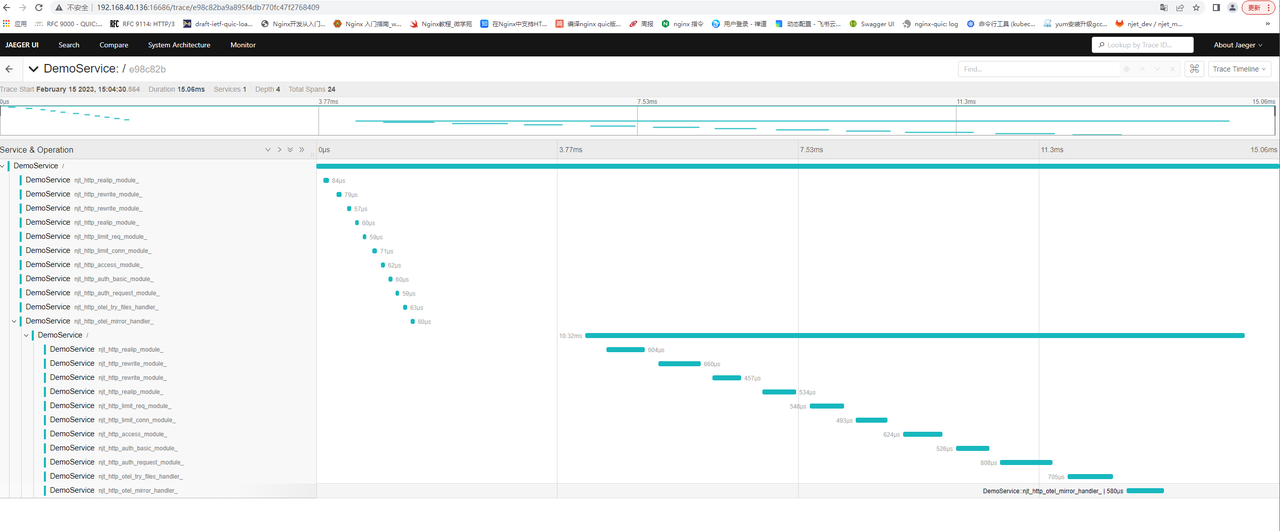

4.1 Distributed service tracing module: njt_otel_module.so

Jaeger UI: http://{jaeger server ip}:16686

View traces in the Jaeger UI, for example:

http://192.168.40.136:16686
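You can check the collector-to-Jaeger pipeline independently of NJet. Post a hand-built span to the collector's OTLP/HTTP receiver (port 4318 in the compose file), then search for it in the Jaeger UI. The sketch below is minimal and uses only the Python standard library. The service name, span name and collector IP are placeholders. It assumes the collector accepts OTLP/JSON with hex-encoded IDs on `/v1/traces`.

```python
import json
import secrets
import time
import urllib.request


def build_test_payload(service_name: str = "otlp-smoke-test") -> dict:
    """One-span OTLP/JSON trace payload (placeholder names)."""
    now = time.time_ns()
    return {
        "resourceSpans": [{
            "resource": {"attributes": [
                {"key": "service.name", "value": {"stringValue": service_name}},
            ]},
            "scopeSpans": [{
                "scope": {"name": "manual-smoke-test"},
                "spans": [{
                    "traceId": secrets.token_hex(16),   # hex in OTLP/JSON
                    "spanId": secrets.token_hex(8),
                    "name": "smoke-test-span",
                    "kind": 1,                          # SPAN_KIND_INTERNAL
                    "startTimeUnixNano": str(now - 1_000_000),
                    "endTimeUnixNano": str(now),
                }],
            }],
        }]
    }


def send(payload: dict, endpoint: str = "http://192.168.40.136:4318/v1/traces") -> int:
    """POST the payload to the collector and return the HTTP status code."""
    req = urllib.request.Request(
        endpoint,
        data=json.dumps(payload).encode(),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=5) as resp:
        return resp.status
```

If the pipeline is healthy, `send(build_test_payload())` should return 200. Shortly afterwards the span should appear in Jaeger under the service otlp-smoke-test.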